James Gleick, author of The Information: A History, A Theory, A Flood, puts it rather elegantly:

We’re in the habit of associating value with scarcity, but the digital world unlinks them. You can be the sole owner of a Jackson Pollock or a Blue Mauritius but not of a piece of information — not for long, anyway. Nor is obscurity a virtue. A hidden parchment page enters the light when it molts into a digital simulacrum. It was never the parchment that mattered.

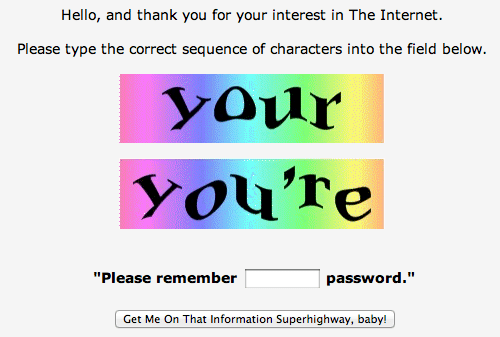

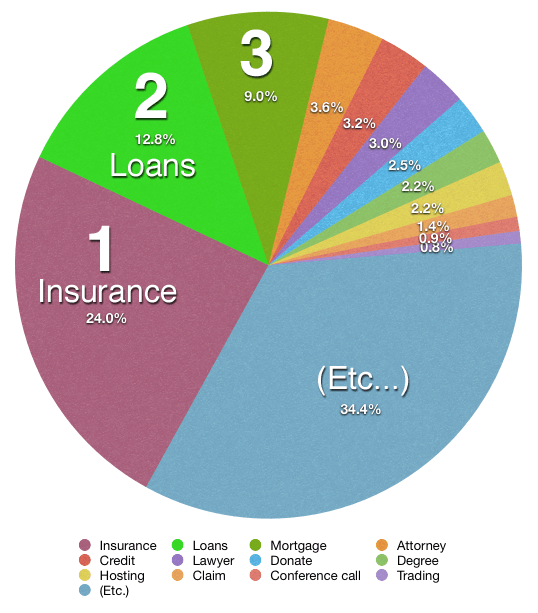

Historically, the two main obstacles to information discovery have been barriers of awareness and barriers of accessibility. Barriers of awareness cover all the information we can’t access because we simply don’t know it exists. Barriers of accessibility cover the information we do know is out there but that remains outside our practical, infrastructural or legal reach. The digital convergence has solved the latter by bringing much previously inaccessible information into the public domain. In the process, it has made the former worse: by increasing the net amount of information available to us, it has created a wealth of information we can’t humanly be aware of, given our cognitive and temporal limitations. It has also added a third barrier, a barrier of motivation.

Fascinating article.

I do a lot of curation, here and on other blogs, but I’d like to start doing it in a more structured manner – adding more context, building a bigger picture, etc.